I see. Thank you very much. I’ll keep that in mind for the future (but see my remark below after the next quote).

However, the problem here is that my input files (the ones I would like to concatenate) are M2TS, which mkvinfo of course cannot read. This probably means that each M2TS file must first be converted to MKV individually. Afterwards, I can build the set of all checksums from the individual input files, then build the set of all checksums from my already existing final MKV file, and finally check whether both sets are identical.

So it’s a bit of work, but it is surely very reliable and saves me from manually searching for frames in mkvinfo’s output. Thanks again for the tip!
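The comparison described above can be sketched in Python. This is a minimal sketch under assumptions: it works on text dumps already saved from mkvinfo with its `--checksums` option (plus whatever verbosity setting your mkvinfo version needs to list every frame), and it assumes that each frame line contains a token such as `adler 0x1a2b3c4d`. The regex `ADLER_RE` and the helpers `frame_checksums` and `compare_frames` are hypothetical names, and the sample dumps are made up. It uses a `Counter` (a multiset) rather than a plain set, so that frames occurring more than once, such as identical silent audio frames, are not collapsed into one.

```python
from collections import Counter
import re

# ASSUMPTION: every frame line in mkvinfo's --checksums output carries a token
# like "adler 0x1a2b3c4d". Adjust this pattern if your mkvinfo version differs.
ADLER_RE = re.compile(r"adler (0x[0-9a-fA-F]{8})")


def frame_checksums(mkvinfo_text: str) -> Counter:
    """Multiset of the per-frame Adler-32 checksums found in one mkvinfo dump."""
    return Counter(m.group(1).lower() for m in ADLER_RE.finditer(mkvinfo_text))


def compare_frames(input_dumps: list[str], final_dump: str) -> tuple[Counter, Counter]:
    """Return (missing, extra): frames absent from the final file / frames only in it."""
    expected = Counter()
    for dump in input_dumps:  # one dump per input file converted to MKV
        expected += frame_checksums(dump)
    actual = frame_checksums(final_dump)
    # Counter subtraction keeps only positive counts, i.e. the real differences.
    return expected - actual, actual - expected


# Made-up dumps, for illustration only.
part1 = "+ Frame with size 100, adler 0x00000001\n+ Frame with size 80, adler 0x00000002\n"
part2 = "+ Frame with size 90, adler 0x00000003\n"
missing, extra = compare_frames([part1, part2], part1 + part2)
print(not missing and not extra)  # True
```

Checksums from all tracks are pooled here; keying them by track number as well would make any mismatch easier to locate.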

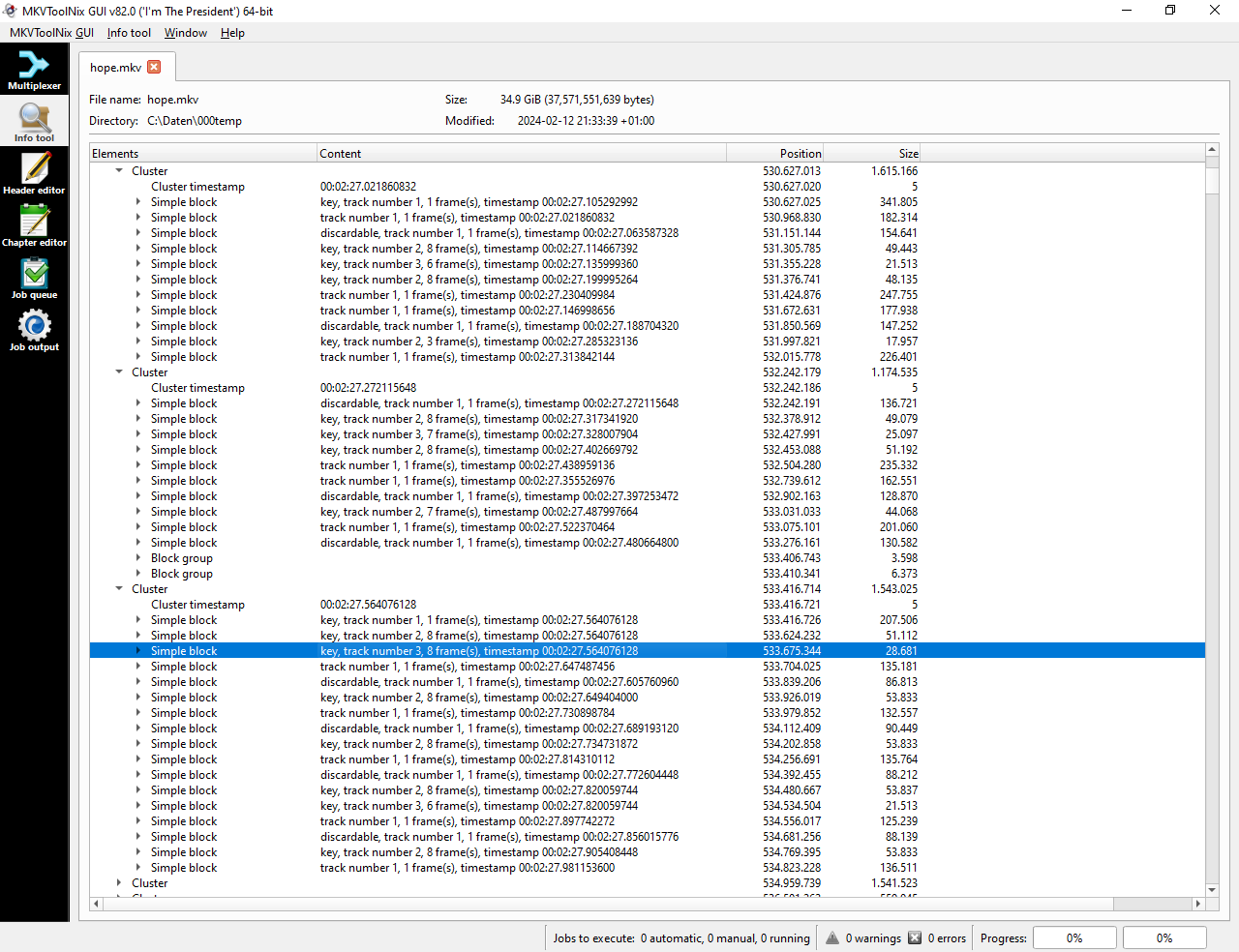

Silly me! My observation was wrong, and mkvmerge works as intended. The attached screenshot shows my embarrassing mistake.

The third cluster marks the start of a new M2TS input file. We can see that the first frame of each audio stream has the same timestamp as the cluster itself; the same is true for the video stream. This is exactly what I wanted to achieve with the help of the external timestamp files.

However, in the preceding cluster (the second cluster in the screenshot), the last audio frame in both track 2 and track 3 appears to end before the new cluster begins, which would mean an audio gap. Well, not really.

When I first looked at the mkvinfo GUI output, I was a bit carried away by all the details and completely missed the two additional block groups in the second cluster. Unlike simple blocks, block groups do not show their contained frames unless they are expanded, and they are collapsed by default.

This morning I realized my mistake and expanded the two block groups. And of course, the missing audio frames were there.

I now also understand why the last frames of the two audio streams have been put into block groups: mkvmerge has automatically tagged these block groups with the correct duration. This is necessary because those last audio frames actually end after the timestamp of the next audio frame (in the next cluster). Setting the correct block duration hopefully makes a player stop outputting the respective audio frame once the block duration has elapsed and switch seamlessly to the next audio frame.
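The timing argument above can be made concrete with a little arithmetic. The Python sketch below uses made-up timestamps (not the values from the screenshot) and a hypothetical `Frame` type. For one track, it computes at each frame boundary the next frame's start minus the current frame's end (positive means a gap, negative an overlap), plus the block duration that would make the current frame end exactly where the next one starts. The 32 ms frame length corresponds to a 1536-sample AC-3 frame at 48 kHz and is only an example; it makes no claim about what mkvmerge writes internally.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    start_ms: float     # block timestamp of the frame
    duration_ms: float  # nominal frame length (e.g. 32 ms for 1536 samples at 48 kHz)


def boundary_offsets(frames: list[Frame]) -> list[float]:
    """next.start - (cur.start + cur.duration) for consecutive frames of ONE track.

    > 0: gap (silence), < 0: overlap, 0: seamless.
    """
    return [
        round(nxt.start_ms - (cur.start_ms + cur.duration_ms), 3)
        for cur, nxt in zip(frames, frames[1:])
    ]


def seamless_duration(cur: Frame, nxt: Frame) -> float:
    """Block duration that would end `cur` exactly where `nxt` starts."""
    return round(nxt.start_ms - cur.start_ms, 3)


# Hypothetical timestamps around a file boundary: the third frame is the
# first frame of the next input file and starts 12 ms "too early".
track = [Frame(9968.0, 32.0), Frame(10000.0, 32.0), Frame(10020.0, 32.0)]
print(boundary_offsets(track))                # [0.0, -12.0]
print(seamless_duration(track[1], track[2]))  # 20.0
```

A player that honours a 20 ms block duration for the second frame would hand over to the third frame without overlap; one that ignores it has to deal with 12 ms of overlapping audio.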

In summary, external timestamp files make it possible to “simulate” the feature I was requesting here. This is absolutely great! Big kudos for those files!

Now I’ll investigate whether this method actually solves the nasty problems I’m after. That depends on the behavior of the players: in particular, whether they respect the block duration in the sense explained above or, if not, how they cope with a situation where the timestamp of the next audio frame lies before the end of the current audio frame.

Best regards!